In This Issue

- Cybersecurity Spend

- Obama: Pandemic

- Need United Effort

- Report from the CEO

- Edge Computing

- Gaining an IoT Edge

- Telefonica Big Data

- DarkMatter & NetApp

- Innovation Begins

- Face of Hybrid Cloud

- End-to-End Resiliency

- Return of Fragmented

- Edge Computing: Rev

- Machine Learning & AI

- Escaping Mainframes

- Cloud and Fog in IoT

- Coming DCIA Events

Cybersecurity Spend to Top $100 Billion by 2020

Excerpted from ZDNet Report by Larry Dignan

Enterprises are going to spend heavily on security hardware, software, and services as they race to avoid becoming the next big cyberattack victim, says IDC.

Revenue for the information security industry — hardware, software, and services — will top $100 billion in 2020 as enterprises spend heavily to fend off cyberattacks.

IDC is forecasting that global revenue for security technologies will grow from $73.7 billion in 2016 to $101.6 billion in 2020, or at a compound annual growth rate of 8.3 percent.
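As a quick check on that arithmetic, here is a minimal Python sketch of the compound annual growth rate calculation. The function name is an illustrative assumption, and the inputs are the rounded figures quoted above. Computed from those rounded endpoints over four years, the rate comes out at roughly 8.4 percent, close to the 8.3 percent IDC reports; the small gap presumably reflects rounding in the published numbers.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of US dollars (2016 and 2020).
rate = compound_annual_growth_rate(73.7, 101.6, years=2020 - 2016)
print(f"Implied CAGR: {rate:.1%}")
```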

That growth rate is more than twice the forecast growth rate for overall IT spending over the next five years.

Funny how all that money isn’t exactly making me feel any more cybersecure.

After all, data breaches are an almost daily occurrence. Nevertheless, the security industry is blessed since no tech buyer would ever want to be accused of scrimping on protecting infrastructure.

IDC analyst Sean Pike noted that enterprises fear becoming the next cyberattack victim… Read More

Obama: Cybersecurity Is Like Fighting a Pandemic

Excerpted from Washington Post Report by Andrea Peterson

After facing an unprecedented wave of cyberattacks against private and public organizations during his presidency, President Obama thinks about digital threats like a public health crisis, he said in a Wired Magazine interview published Wednesday.

Instead of approaching cybersecurity as a traditional battle, he thinks about defending systems as if preparing for a pandemic. He said:

Traditionally, when we think about security and protecting ourselves, we think in terms of armor or walls.

Increasingly, I find myself looking to medicine and thinking about viruses, antibodies.

What I spend a lot of time worrying about are things like pandemics.

You can’t build walls to prevent the next airborne lethal flu from landing on our shores.

Instead, what we need to do is create public health systems in all parts of the world, click triggers that tell us when we see something emerging, and quick protocols and systems to make vaccines smarter.

This metaphor lines up with mantras that many security experts have been repeating… Read More

Raskin: Biz & Gov Need United Cybersecurity Effort

Excerpted from Wall St. Journal Report by Tatyana Shumsky

Cyberthreats to financial institutions have transformed into a global problem that requires a united effort from government and business, according to Sarah Bloom Raskin, deputy secretary of the US Department of the Treasury.

While government cyberprotection efforts have become more rigorous and proactive, rather than reactive, in recent years, partnering with the private sector is essential to their success, Ms. Raskin told The Wall Street Journal’s Financial Regulation Conference.

“It’s something that the government can’t do alone,” Ms. Raskin said.

In recent years periodic attacks on a bank’s computer security perimeter have been replaced by regular attempts at stealing the financial institution’s “crown jewels,” Ms. Raskin said.

“The goals have changed. It’s not so much nuisance, but misappropriation,” she said.

The Treasury Department has collaborated with financial institutions to set up information sharing mechanisms and identify tactics used in the attacks, she said. A hacker frequently uses the same technology, methodology, and internet addresses… Read More

Report from DCIA CEO Marty Lafferty

Two questions I often hear are, “How do fog and edge computing relate to cloud computing?” and “What’s the difference between fog and edge computing?”

It’s helpful to begin with a definition of cloud computing, which continues to be our industry’s leading growth driver as more and more private and public sector organizations of all sizes migrate “to the cloud” for more efficient management of business and technical functions.

Cloud computing can be defined as a group of computers and servers connected together over the internet into a cohesive network for sharing storage and compute resources, including programs and applications. Its basic end components are remote computing devices and centralized data centers.

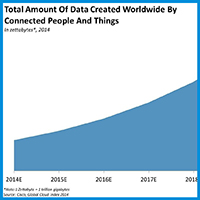

The second most exciting trend in our industry, the internet of things (IoT), serves as a driver for both fog and edge computing, which can be considered extensions of cloud computing.

The IoT is adding many more remote connected devices generating enormous amounts of new data that must be accessed quickly by other computing resources to perform value-adding functionality.

Both fog computing and edge computing are architectures that respond to this need by pushing intelligence and processing capabilities out of centralized data centers and down closer to where the data originates – IoT sensors, relays, motors, etc.

The key difference between the two is where that intelligence and computing power are placed.

Fog computing pushes intelligence down to the local area network (LAN) level, processing data in an IoT gateway, also known as a “fog node.”

Edge computing pushes the intelligence, processing power, and communication capabilities of an “edge gateway” appliance even further down the data chain directly into IoT devices themselves.

Edge computing actually is an older term, describing a layer of computing at the edge of the network to process data for more efficient transport to the cloud, while Cisco is credited with creating the term fog computing, essentially to describe its mastery of secure transport for such data.

With fog computing, data from a control system program is sent to a protocol gateway, which converts the data into a protocol that internet systems understand, then sends it to an IoT gateway on the LAN, which performs higher-level processing and analysis; this system filters, analyzes, processes, and may even store the data for transmission to the cloud at a later date.

Edge computing simplifies the communication flow and reduces potential points of failure by wiring IoT end devices into an intelligent protocol gateway to collect, analyze, and process the data they generate while also running the control system program; programmable automation controllers (PACs) then determine what data should be stored locally or sent to the cloud for further analysis.

In edge computing, intelligence is literally pushed to the network edge, where physical assets are first connected together and where IoT data originates.

With fog computing’s additional components, greater scalability and externally managed security measures can be supported.
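As a rough illustration of the two data flows described above, here is a minimal Python sketch. Every name in it (`Reading`, `protocol_gateway`, `FogNode`, `EdgeDevice`), the `id=value` message format, and the simple threshold rule are assumptions made for illustration only; they are not drawn from any vendor's product or API.

```python
from dataclasses import dataclass, field


@dataclass
class Reading:
    sensor_id: str
    value: float


def protocol_gateway(raw: str) -> Reading:
    """Translate a raw 'id=value' string (hypothetical format) into a structured reading."""
    sensor_id, value = raw.split("=")
    return Reading(sensor_id, float(value))


@dataclass
class FogNode:
    """Fog path: an IoT gateway on the LAN filters readings and buffers them for the cloud."""
    threshold: float
    buffer: list = field(default_factory=list)

    def process(self, reading: Reading) -> None:
        # Keep only readings worth forwarding; drop the rest at the LAN level.
        if reading.value >= self.threshold:
            self.buffer.append(reading)

    def flush_to_cloud(self) -> list:
        # Hand over the buffered batch and start a fresh buffer.
        batch, self.buffer = self.buffer, []
        return batch


@dataclass
class EdgeDevice:
    """Edge path: conversion and analysis happen at the device, which picks the destination."""
    threshold: float
    local_store: list = field(default_factory=list)

    def handle(self, raw: str) -> str:
        reading = protocol_gateway(raw)
        if reading.value >= self.threshold:
            return "cloud"
        self.local_store.append(reading)
        return "local"


raw_samples = ["temp-1=21.5", "temp-1=88.0", "temp-2=19.0"]

# Fog: device -> protocol gateway -> fog node on the LAN -> cloud (later, in a batch).
fog = FogNode(threshold=80.0)
for raw in raw_samples:
    fog.process(protocol_gateway(raw))
cloud_batch = fog.flush_to_cloud()

# Edge: the device decides for each reading whether it stays local or goes to the cloud.
edge = EdgeDevice(threshold=80.0)
destinations = [edge.handle(raw) for raw in raw_samples]
```

The fog path has two separate hops before the cloud, while the edge path folds conversion and the store-or-send decision into one component, which is the sense in which it has fewer potential points of failure.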

There’s much more to it, but this offers a basic starting point for your further exploration. Share wisely, and take care.

Edge Computing: Lower Data Costs and Cut Inefficiencies

Excerpted from Clear Sky Blog by Courtney Pallotta

Most companies, especially those accountable to shareholders, probably wouldn’t want to be known for living on the edge.

But in the case of data access and management, the edge – more specifically, edge computing – makes a lot of sense.

With an edge computing approach, data is stored in colocation sites in regional areas so that it’s close to end users who need to access the information.

This kind of IT architecture, in which data storage is part of a powerful network, avoids the problem of latency across long distances.

Highly transactional workloads often don’t perform well when they’re separated by a long distance from users.

Edge computing is particularly valuable in today’s business environment, with massive volumes of data constantly being generated by people and machines.

Placing such data in the cloud can be complex, accessing it can be expensive and the process can hinder IT performance.

But, on the other hand, keeping data local doesn’t work because there’s too much data to manage… Read More

Gaining an “Edge” in IoT

Excerpted from Computer World Report by Jack Gold

Estimates suggest that in the next 4-5 years, 30 billion to 50 billion “things” will be deployed.

These devices will range from relatively simple sensors (e.g., temperature, pressure, rain) to complex data collectors (e.g., health monitors, shipping/transportation monitors, chemical process monitors, environmental stations).

Many will be cost sensitive “throw away” devices deployed in huge numbers to consumers.

But many will be high value devices, particularly in Enterprise of Things (EoT) applications, and in mission critical situations (e.g., health care, autonomous driving, process control, security, smart cities) where many companies are still formulating their strategies.

I don’t expect most IoT devices to be simple data gatherers that send collected information to the cloud for analysis and action.

I expect the majority of IoT devices to include significant processing capability to enhance their functionality and to maximize the true potential of IoT… Read More

Telefonica Launches Transformational Big Data Unit

Excerpted from Report by James Nunns

Telefonica is hoping to tap into the buzz around the big data market by launching its own services unit.

LUCA, the big data unit, will offer a portfolio of services to cover the requirements of all kinds of organizations to advance their transformational journey and benefit from the opportunities presented by big data, the company said.

Chema Alonso, Chief Data Officer of Telefonica, said:

“Data is a critical asset for the future of Telefonica and any organization. With Telefonica’s fundamental promise to always maintain privacy, security and the transparent use of the data we want to help our clients understand its full potential.”

The company believes that LUCA will be able to help its customers with their decision making, more efficient resource management, and in returning the benefits of the wealth of information to both users and society.

The unit, which will be led by Elena Gil, global big data B2B director, Telefonica, will have three main lines of products and services: Business Insights, analytics and external consultancy services, and Big-Data-as-a-Service (BDaaS).

The Business Insights area is said to include services such as Smart Steps, which are focused on mobility analysis… Read More

DarkMatter & NetApp Partner to Drive Data Management

Excerpted from DarkMatter Press Announcement

DarkMatter, an international cyber security firm headquartered in the UAE, and NetApp, a leading global data storage and management company, have announced a partnership to jointly develop and deliver secure data storage and big data analytics solutions.

With regional government and enterprise users increasingly looking to leverage big data analytics to drive business and operational improvements, large public and private cloud data storage solutions are required, which not only integrate seamlessly with their business processes, but also demonstrate the highest levels of security and cyber threat mitigation.

With advanced persistent threats on the rise both globally and in this region, security is particularly urgent in the data environment — all the more so given the regular announcements of increasingly large and damaging data breaches across industries.

Through this agreement, customers in the Middle East will benefit from DarkMatter’s implementation of resource-efficient and scalable NetApp technologies that quickly ingest and analyze data, thereby empowering end-users with actionable insights in a predictable and efficient manner… Read More

Cloud Turning Point: Innovation Phase Begins

Excerpted from IBM Blog by Marie Wieck

In the past, when I’ve spoken to clients about cloud computing, those conversations were mostly with line-of-business managers focused on cutting costs and getting new, customer-facing applications up and running quickly.

Today, the tide has turned, as chief executives and other line leaders want to know how cloud computing can help them transform their entire business, create new models and spur innovation.

While much of the attention in cloud has been focused on public infrastructure deployments, they are only part of the overall story.

Now those conversations reflect an intense interest in digital transformation through open, industry-specific hybrid cloud solutions that deliver higher value with analytics and cognitive capabilities.

Overwhelmingly, what we are hearing from clients and the market is the need for a clear path to extend the significant IT investments they have made by leveraging new, innovative cloud services, while ensuring their data is protected.

With hybrid cloud, business leaders don’t have to rip out and replace existing systems; they can focus on elevating their business value… Read More

The Changing Face of Hybrid Clouds

Excerpted from IT Business Edge Report by Arthur Cole

The hybrid cloud is considered to be the “safe zone” between the rigidity and poor scalability of private resources and the lack of control in the public domain.

But while it was always expected that hybrids would one day morph into a seamlessly integrated, broadly distributed data ecosystem, that vision is starting to look less feasible, and less desirable, as experience with real cloud architectures grows.

In a recent post on Forbes, Moor Insights & Strategy analyst John Fruehe describes the “hybrid cloud dilemma” in pretty stark terms.

He says that from both a security and logistics standpoint, a fully integrated hybrid cloud is proving extremely hard to implement.

Rather, current thinking in IT circles is starting to favor a “hybrid cloud environment” in which data and resources may be shared across multiple domains and providers, but individual compute environments will exist in only one.

So rather than try to craft a single computing architecture that follows data wherever it goes, the enterprise would do better to focus… Read More

End-To-End Resiliency in Hybrid Cloud World

Excerpted from Forbes Report by Hugo Moreno

Modern IT operations are undergoing upheaval, brought on by a perfect storm of internal and external forces.

While traditional IT services once ran solely from central data centers designed for client/server architectures or mainframes, many IT departments are now turning to hybrid cloud – a blend of traditional and cloud services – for greater agility and flexibility to meet changing business requirements.

These valuable resources also provide reliable platforms for running emerging technologies, such as cognitive computing, sophisticated analytics and a new generation of mobile applications.

This approach is catching fire: the technology researcher IDC predicts that more than 80% of IT organizations will commit to hybrid architectures by 2017.

A recent Forbes Insights report, “The Need to Bring a Paradigm Shift in Business Resiliency,” sponsored by IBM, examines the implications of these developments.

With hybrid cloud architectures as a foundation, enterprise workloads can originate from a wide range of sources… Read More

The Return of Fragmented Computing?

Excerpted from Virtualization Review Report by Dan Kusnetzky

Recently, I spoke to the folks at Kaleao about the company’s “hyperconverged” ARM-based servers and server appliances.

While I found the discussion and the products the company is offering quite intriguing, I couldn’t help but see this announcement within a larger context.

I guess that’s the burden of being a software archaeologist. Our discussion focused on today’s market and the desire of many enterprises to swing the pendulum back from a complex, overly distributed computing environment and re-converge computing functions on an inexpensive, but very powerful and scalable computing environment.

The company described how it looked at the same problems everyone sees, but tried to think differently about possible solutions.

The company decided that it would start with a clean sheet of paper and build a computing environment based upon ARM processors and use the best of today’s massively parallel hardware and software designs.

Here’s how the company describes its offering, KMAX… Read More

Edge Computing: New Revenue Opportunity

Excerpted from MSPmentor Blog by Marvin Blough

Edge computing may be the next great opportunity for MSPs.

As customer demand for cloud services increases and the Internet of Things (IoT) gains traction, the need for servers that operate at the edge of the network is going to explode.

As such, it is bound to create a vast new market for MSPs to apply their expertise with remote service delivery.

Edge computing wouldn’t even be a thing if the cloud didn’t exist.

Here’s why: As businesses push more and more of their computing to cloud infrastructures, they are starting to come to terms with certain limitations.

Those limitations can seriously interfere with a company’s plans to leverage data analytics and execute IoT implementations.

The primary issue with relying on cloud infrastructure has to do with latency, which tends to increase based on the distance that data has to travel.

Accessing a cloud infrastructure hundreds or thousands of miles away is perfectly acceptable for certain kinds of processing and storage… Read More
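To put rough numbers on the distance effect, here is a minimal Python sketch that estimates the lowest possible round-trip time over optical fiber. It assumes signals travel at about two-thirds the speed of light in fiber and ignores routing, queuing, and processing delays, so real-world latency will be higher. The function and constant names are illustrative.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum
FIBER_VELOCITY_FACTOR = 0.67        # assumption: signals in fiber travel at roughly 2/3 of c
KM_PER_MILE = 1.609344


def round_trip_ms(distance_miles: float) -> float:
    """Estimate the minimum round-trip propagation delay, in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_seconds * 1000


for miles in (10, 100, 1000):
    print(f"{miles:>5} miles: at least {round_trip_ms(miles):.2f} ms round trip")
```

Under these assumptions, a data center 1,000 miles away adds on the order of 16 milliseconds per round trip before any processing happens, while one 10 miles away adds a small fraction of a millisecond.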

Power of Machine Learning & AI in Data Centers

Excerpted from CloudTech Report by Richard Jenkins

Data is everywhere – masses of it.

And it’s helping businesses to make better decisions across departments.

Marketing can utilize data to discover the effectiveness of email campaigns, finance can analyze past trends to make predictions and projections for the future, and sales can target their follow-up with detailed information on prospective customers.

But data is only useful when business tools transform it into valuable information.

Data intelligence through algorithms and analytics makes business data relatable.

The most advanced solutions require enormous amounts of data to be able to offer accurate insight to users.

As a result, many solutions are cloud based, as most businesses do not have the IT capacity or budget to store this amount of information.

So where does all this data reside? The data center… Read More

Enterprises Escaping Mainframe Island?

Excerpted from Network World Report by Dan Kusnetzky

From time to time, a vendor’s PR rep sends me a note about the “problem” that is caused by mainframe systems being at the hub of enterprise computing.

In reality, these systems often offer more integrated processing power, larger memory capacity and more efficient database operations than a distributed, x86-based solution.

The most recent pitch I received included this sentence: “How the dusty old legacy mainframe holds back cloud initiatives… and how it can be modernized.”

Part of the reason mainframes won’t die is that often they simply cost less to operate when all of the costs of ownership and workload operations are considered.

While I was with industry research firm IDC (I was IDC’s vice president of system software research for a time), my team would conduct extensive cost-of-ownership studies to determine the relative costs of a workload or an IT solution hosted on different platforms.

Surveys would be conducted to learn the actual costs incurred by companies and then an overall cost of ownership… Read More

Roles of Cloud & Fog in IoT Revolution

Excerpted from Business Insider Report by Andrew Meola

The Internet of Things (IoT) is starting to transform how we live our lives, but all of the added convenience and increased efficiency comes at a cost.

The IoT is generating an unprecedented amount of data, which in turn puts a tremendous strain on the Internet infrastructure. As a result, companies are working to find ways to alleviate that pressure and solve the data problem.

Cloud computing will be a major part of that, especially by making all of the connected devices work together.

But there are some significant differences between cloud computing and the Internet of Things that will play out in the coming years as we generate more and more data.

Below, we’ve outlined the differences between the cloud and the IoT, detailed the role of cloud computing in the IoT, and explained “fog computing,” the next evolution of cloud computing.

Cloud computing, often called simply “the cloud,” involves delivering data, applications, photos, videos, and more over the Internet to data centers. IBM has helpfully broken down cloud computing into six different categories… Read More

Coming Events of Interest

Security of Things World USA — November 3rd-4th in San Diego, CA. SoTWUSA has been designed to help you find pragmatic solutions to the most common security threats facing the IoT.

Rethink! Cloudonomic Minds — November 21st-22nd in London, England. R!CM will cover how IoT is impacting cloud strategies and how to take advantage of these two key technology trends.

Government Video Expo — December 6th-8th in Washington, DC. GVE is the East Coast’s largest technology event for broadcast and video professionals, featuring a full exhibit floor, numerous training options, free seminars, keynotes, panel discussions, networking opportunities, and more.

CES 2017 — January 5th-8th in Las Vegas, NV. More than 3,800 exhibiting companies showcasing innovation across 2.4 million net square feet, representing 24 product categories.

Industry of Things World USA — February 20th-21st in San Diego, CA. Global leaders will gather to focus on monetization of the Internet of Things (IoT) in an industrial setting.

fintech:CODE — March 16th-17th in London, UK. A new international knowledge exchange platform bringing together all DevOps, IT, and IoT stakeholders who play an active role in the finance and tech scene. Key topics include software development, technical challenges for DevOps, DevOps security, cloud technologies and SaaS.

retail:CODE — March 16th-17th in London, UK. Through 20 real-life case studies, state-of-the-art keynotes, and interactive World Café sessions, 35+ influential speakers will share their knowledge at the intersection of the retail and technology sectors.